What’s the ‘best’ file compression method?

I’ve been doing a little research into the best compression method to use in Ubuntu. There are so many compression types when I right click –> create archive. Keep in mind that I’m simply using the default compression values right from the desktop; there’s no special tweaking involved here. Mainly, I want something quick and easy for everyday file management. I’d seen many of these formats before and had heard that some were ‘better than others’: some faster, some compressing more. I really wasn’t sure what the best option was.

I often have large data files (hundreds of megs or larger) that I like to make a backup copy of, but I would rather store them in a compressed format. I also don’t want to wait around forever to get the job done. I’m working on a fast machine (duo core 2) with a gig of memory.

So what gave me the ‘best’ results when compressing my 1.4 GB file? It’s a VirtualBox .vdi file, essentially the entire HD for a Windows 2000 virtual machine. The results could be very different on a collection of lots of small files, something I might test later. In the meantime there’s a good study already published here.

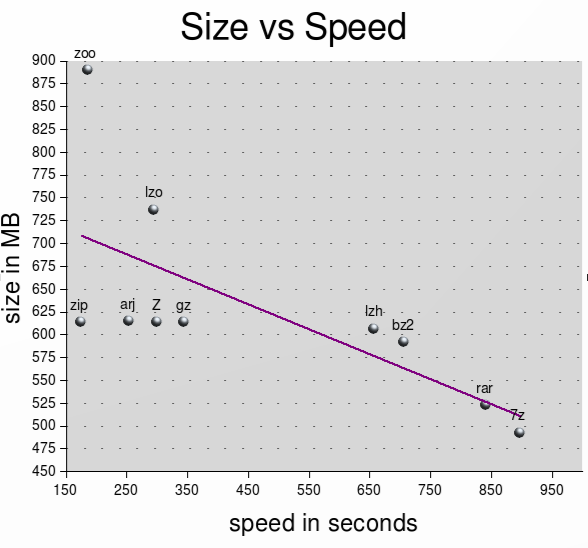

Here are my test results for several different formats on the file:

Best compression – size in MB.

- 7z 493

- rar 523

- bz2 592

- lzh 607

- gz 614

- Z 614

- zip 614

- arj 615

- lzo 737

- zoo 890

Best Time in seconds

- zip 175

- zoo 186

- arj 253

- lzo 295

- Z 300

- gz 344

- lzh 657

- bz2 706

- rar 840

- 7z 896
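For anyone who wants to repeat this kind of size-versus-time comparison without the desktop archiver, here is a minimal Python sketch. It is not how the numbers above were produced. It assumes the standard-library codecs are acceptable stand-ins (zlib for the DEFLATE method behind .zip and .gz, bz2 for .bz2, lzma for the LZMA family behind .7z), and it compresses in memory, so a 1.4 GB file would need a streaming version instead.

```python
import bz2
import lzma
import time
import zlib

# Assumption: stdlib codecs stand in for the archive formats in the tables.
CODECS = {
    "zlib": zlib.compress,   # DEFLATE, as used by .zip and .gz
    "bz2": bz2.compress,     # bzip2, as used by .bz2
    "lzma": lzma.compress,   # LZMA family, as used by .7z and .xz
}


def benchmark(data: bytes) -> list[tuple[str, int, float]]:
    """Return (codec name, compressed size in bytes, wall-clock seconds)."""
    results = []
    for name, compress in CODECS.items():
        start = time.perf_counter()
        compressed = compress(data)
        elapsed = time.perf_counter() - start
        results.append((name, len(compressed), elapsed))
    return results


if __name__ == "__main__":
    # Hypothetical sample data; replace with the bytes of a real file.
    sample = b"disk images often contain long runs of similar bytes " * 20000
    for name, size, seconds in sorted(benchmark(sample), key=lambda row: row[1]):
        print(f"{name:5s} {size:>10d} bytes {seconds:8.3f} s")
```

Default settings are used for every codec, which mirrors the "no special tweaking" approach of the test, but the ranking on your own data may differ.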

Conclusions:

It came as a surprise to me that plain old .zip to which I am quite familiar with already, is still a better choice for high compression of large data files in a short amount of time.

.arj, .Z and .gz all compress about as much, but just not as fast as .zip.

I’ve heard really good things about .7z and its ability for high compression, and it does show in the results. Both .7z and .rar are able to use the duo core processor better (CPU use at 77%-90% compared to 55% for the others) yet take much longer than anything else to compress. If the file were being uploaded onto the internet, perhaps I would choose the highest compression even if it takes 4 times longer.
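The upload trade-off can be put into numbers using the figures from the tables (614 MB in 175 s for zip, 493 MB in 896 s for 7z). The sketch below is only back-of-the-envelope arithmetic: it assumes a constant upload speed and ignores decompression on the other end, and the function names are made up for illustration.

```python
# Figures taken from the results tables: size in MB, compression time in seconds.
ZIP_MB, ZIP_S = 614, 175
SEVENZ_MB, SEVENZ_S = 493, 896


def total_seconds(size_mb: float, compress_s: float, upload_mb_per_s: float) -> float:
    """Time to compress and then upload at a constant speed (an assumption)."""
    return compress_s + size_mb / upload_mb_per_s


def break_even_mb_per_s() -> float:
    """Upload speed at which zip and 7z take the same total time."""
    return (ZIP_MB - SEVENZ_MB) / (SEVENZ_S - ZIP_S)


if __name__ == "__main__":
    speed = break_even_mb_per_s()
    print(f"break-even upload speed: {speed:.3f} MB/s")
    for mb_per_s in (0.1, 1.0):
        zip_total = total_seconds(ZIP_MB, ZIP_S, mb_per_s)
        sevenz_total = total_seconds(SEVENZ_MB, SEVENZ_S, mb_per_s)
        print(f"{mb_per_s} MB/s: zip {zip_total:.0f} s, 7z {sevenz_total:.0f} s")
```

With these numbers, 7z saves 121 MB at a cost of 721 extra seconds, so it only wins overall when the connection is slower than roughly 0.17 MB/s.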

I had read that .lzo and .zoo were good (some people claimed they were better) and fast with high compression, but I just don’t see that here at all. They’re way off the mark.

So what’s the best option?

.zip for quick and compatible compression.

.rar for high compression and compatibility, when you have the time to wait.

.z7 for maximum compression when you have the time to wait.

Some compression tools (e.g. lzo) are so fast that the process is typically I/O bound. Did you test the *complete* compression time? Or did you use ‘time’ and see the process time only (excluding I/O)?
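The difference this comment is asking about can be measured directly. Here is a small Python sketch, assuming zlib as the compressor and hypothetical file paths: it reports wall-clock time (which includes waiting on disk I/O) next to CPU time for the process (which does not).

```python
import time
import zlib


def timed_compress(src_path: str, dst_path: str) -> tuple[float, float]:
    """Compress src_path into dst_path; return (wall seconds, CPU seconds)."""
    wall_start = time.perf_counter()   # elapsed real time, includes I/O waits
    cpu_start = time.process_time()    # CPU time of this process only
    compressor = zlib.compressobj()
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        # Read in 1 MiB pieces so large files do not have to fit in memory.
        while chunk := src.read(1 << 20):
            dst.write(compressor.compress(chunk))
        dst.write(compressor.flush())
    wall = time.perf_counter() - wall_start
    cpu = time.process_time() - cpu_start
    return wall, cpu
```

If the wall-clock figure is much larger than the CPU figure, the run was probably limited by the disk rather than by the compressor.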

I use .UHA and .7z for big files, but it takes a lot of time at the high compression setting. It can shrink 1 GB to 200 MB or 100 MB.

“…that plain old .zip to which I am quite familiar with already, is still a better choice…”

=

“… that plain old .zip with which I am already quite familiar is still a better choice…”

The grammar check on your word processor is obviously broken.

This is a website.

A lot of people developing one do not use a full-blown word processor; usually they use Gedit or Notepad++. A word processor like Word is not the same when saving to HTML.

There is one more: KGB Archiver. It has the best compression but takes the most time.

E.g. on a computer with 512 MB of RAM, compressing 25 MB will take 2 hrs.

Cool. Thanks!

wtf WInrAR STore Compression method is the best =)

Well, there are a few others that are not used nearly as often. One example is KGB Archiver, which compresses better than 7zip and is slightly faster than 7zip, but only by a few seconds.

hopefully you’ll find this as helpful as I did.

http://www.codinghorror.com/blog/archives/000798.html

I have been using zip for the same thing, compressing VirtualBox files, but there seems to be a file size limit on what zip can handle (2/4 GB). It seems to just ignore files over the limit, giving the impression that they were included, but on inspecting the archive they are missing. I have heard rzip is designed for larger files, but until it becomes an option in File Roller (I installed the rzip package for command line use) I think I will have to use bz2.
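The classic zip format does have 4 GiB limits, and the ZIP64 extensions exist to get past them. As a hedged sketch (not a description of what File Roller does), Python’s `zipfile` module exposes this through its `allowZip64` argument. The helper below, whose name is made up for illustration, also re-opens the archive and reports any input that did not make it in, which is the check this comment had to do by hand.

```python
import os
import zipfile


def zip_files(paths: list[str], archive_path: str) -> list[str]:
    """Zip the given files and return the inputs missing from the archive."""
    with zipfile.ZipFile(
        archive_path, "w", zipfile.ZIP_DEFLATED, allowZip64=True
    ) as archive:
        for path in paths:
            # Store each file under its base name only.
            archive.write(path, arcname=os.path.basename(path))

    # Re-open the finished archive and compare its listing with the inputs.
    with zipfile.ZipFile(archive_path) as archive:
        stored = set(archive.namelist())
    return [path for path in paths if os.path.basename(path) not in stored]
```

An empty list means every input file is listed in the archive. Whether the tool on the receiving end can read ZIP64 archives is a separate question.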

Thank you! This was exactly what I was looking for!

Thanks once again for the time and effort you spent on this little project!

wow! thanks this was exactly what i was looking for

This has changed now that we have parallel compression across multiple CPUs.
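One common way to use several cores is to split the input into chunks and compress the chunks independently, similar in spirit to tools such as pigz and pbzip2. The Python sketch below is an illustration under assumptions: the chunk size is arbitrary, the output is a plain list of blocks rather than a real archive format, and it relies on zlib releasing CPython’s global interpreter lock while compressing so that threads can overlap.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 4 * 1024 * 1024  # 4 MiB per chunk, an arbitrary choice


def compress_parallel(data: bytes, workers: int = 2) -> list[bytes]:
    """Compress fixed-size chunks of data independently on a thread pool."""
    chunks = [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns the results in the same order as the input chunks.
        return list(pool.map(zlib.compress, chunks))


def decompress_chunks(blocks: list[bytes]) -> bytes:
    """Reverse compress_parallel by decompressing and joining the blocks."""
    return b"".join(zlib.decompress(block) for block in blocks)
```

Because each chunk is compressed without knowledge of the others, the total is usually a little larger than a single-stream result, which is the price paid for the speed-up.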

This is interesting. You should look at a comparison of extracting a compressed file for each technique too.

Thank you for this useful information!

Really nice post, thank you!

I’d like to provide an alert for this document: it might need an update 🙂

http://www.maximumcompression.com/data/summary_mf.php

http://www.maximumcompression.com/data/summary_mf3.php

Hello, great guide.

But the last line reads .Zip7, not .7Zip

Core 2 Duo, not “Duo Core 2”, but I agree with you that .zip is still optimal for big file compression.

Try out NanoZip; it performs better than 7zip and UHARC in half the time, even with medium settings.

FreeArc is better for speed and compression than 7z, and it has so many options.

For text files, the best for speed and compression is bsc: http://libbsc.com

Example, compressing the linux-3.0 source tar:

-rw-r--r-- 1 test test 62356187 Sep 4 19:23 lin3.bsc

-rw-r--r-- 1 test test 62598307 Sep 2 13:10 lin3.max.arc

-rw-r--r-- 1 test test 65386556 Sep 2 14:29 lin3.lrzip

-rw-r--r-- 1 test test 74869264 Sep 2 15:41 lin3.rar

-rw-r--r-- 1 test test 76753134 Sep 2 15:20 lin3.bz2

-rw-r--r-- 1 test test 96686866 Sep 2 14:20 lin3.gz

Yeah! Your article is very nice! I have 7zip, KGB Archiver and WinRAR, but when I compress any file of up to about 100 MB it’s too slow! Do you know any software that can make a highly compressed file in a short time?

Keep it up. Thank you very much…

Nice article, now probably should include PAQ as top – but slower – compressor, and ARC as new viable alternative to 7Z.

Sources: PAQ home http://mattmahoney.net/dc/paq.html FreeArc http://freearc.org/ and PeaZip supporting both on Linux http://www.peazip.org/peazip-linux.html

The “best” compression method is 7zip. It has good compression and, unless you have the attention span of a rodent, is pretty fast. If you mean the most powerful compression, that would be one of the paq8 programs (some work better for different file types).

http://dhost.info/paq8/

From experience, fp8 has really good compression and is one of the “faster” compression programs. The absolute best one I’ve ever found is paq8kx. All of these programs take an insane amount of time and resources (like 2 GB of RAM), and are not recommended for large files unless you are planning on long-term storage. Some of these also need to be compiled from source.

If you are less technical, you can get peazip which has a couple paq8 programs included and you can also get kgb archiver with wine(linux has a command line version). KGB uses paq8 also.

What I don’t like about the standard .zip format is that .zip archives become corrupted very easily. Kinda sucks when you go to open a zip file that was created a while back, and find out it’s been corrupted and it’s unreadable. It’s happened to me more times than I care to remember.
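Zip archives store a CRC-32 checksum for every member, so corruption can at least be detected before the archive is actually needed. A minimal Python sketch using `zipfile.ZipFile.testzip()`, wrapped in a helper whose name is made up for illustration:

```python
import zipfile
from typing import Optional


def first_corrupt_member(path_or_file) -> Optional[str]:
    """Return the name of the first member that fails its checks, or None.

    Accepts a path or a file-like object, as zipfile.ZipFile does.
    """
    with zipfile.ZipFile(path_or_file) as archive:
        # testzip() reads every member and verifies its CRC and header.
        return archive.testzip()
```

This only detects damage, it does not repair anything, and an archive whose central directory is damaged may fail to open at all and raise `zipfile.BadZipFile` instead.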

And what about KGB Archiver?

The “store” method is not compression at all. It simply puts all the files end-to-end in a single stream of data. It only saves space because it eliminates the file sector waste that is inherent to all digital storage systems: one allocation unit can only hold the data for one file. Ergo, if your HD is partitioned into 8 KB allocation units, any file under 8 KB will still require 8 KB of storage space. The “store” method bypasses this limitation by prepending an archive header before the start of the data and using the full space of each allocation unit to hold the data of one or more files. The catch is that the data is typically not usable by the OS directly until it has been extracted once more.
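Both halves of that explanation can be checked with a few lines of Python. The sketch below assumes the 8 KB allocation unit from the comment and uses the standard `zipfile` module, where `ZIP_STORED` is the "store" method and `ZIP_DEFLATED` is ordinary zip compression. The helper names are made up for illustration.

```python
import io
import zipfile


def on_disk_size(file_size: int, unit: int = 8192) -> int:
    """Space a file occupies when rounded up to whole allocation units."""
    return -(-file_size // unit) * unit  # ceiling division, then scale back


def archive_size(files: dict[str, bytes], method: int) -> int:
    """Size in bytes of an in-memory zip built with the given method."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=method) as archive:
        for name, payload in files.items():
            archive.writestr(name, payload)
    return len(buffer.getvalue())


if __name__ == "__main__":
    # Hypothetical example: 100 small files of 500 bytes each.
    files = {f"note{i}.txt": b"x" * 500 for i in range(100)}
    loose = sum(on_disk_size(len(data)) for data in files.values())
    print("loose files on disk:", loose)
    print("stored archive:     ", archive_size(files, zipfile.ZIP_STORED))
    print("deflated archive:   ", archive_size(files, zipfile.ZIP_DEFLATED))
```

A stored archive is always slightly larger than the raw data it holds because of the headers, so any saving comes purely from avoiding the per-file rounding.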

BEST WORK BUDDY :)……………THANKXXXXXXXXXXXXX

Thanks A MILLION!

News update: RAR5 is also available, which is very useful for compressing large files as well. But I like the old UHARC with a GUI. It’s still the best compression.

Reblogged this on Blog do Rodrigo Lira and commented:

Old but gold!

What about first compressing files to zip just to have a smaller file set, THEN 7zip them? eh??
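Compressed output has very little redundancy left, so a second pass usually gains little and can even do worse than using the stronger compressor on the original data. One way to check on your own files is the Python sketch below, which assumes zlib as a stand-in for the zip step and lzma as a stand-in for the 7zip step.

```python
import lzma
import zlib


def compare_double_compression(data: bytes) -> dict[str, int]:
    """Sizes in bytes for single-pass and two-pass compression of data."""
    deflated = zlib.compress(data)  # stand-in for the .zip step
    return {
        "original": len(data),
        "deflate_only": len(deflated),
        "lzma_only": len(lzma.compress(data)),
        "deflate_then_lzma": len(lzma.compress(deflated)),
    }


if __name__ == "__main__":
    # Hypothetical sample; substitute the bytes of a real file.
    sample = b"".join(f"record {i}: some text\n".encode() for i in range(50000))
    for label, size in compare_double_compression(sample).items():
        print(f"{label:18s} {size:>10d}")
```

Comparing the `lzma_only` and `deflate_then_lzma` rows shows whether the extra zip step helps or hurts for a given file.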
